Data Pipeline Implementation: SQL to Power BI

Description

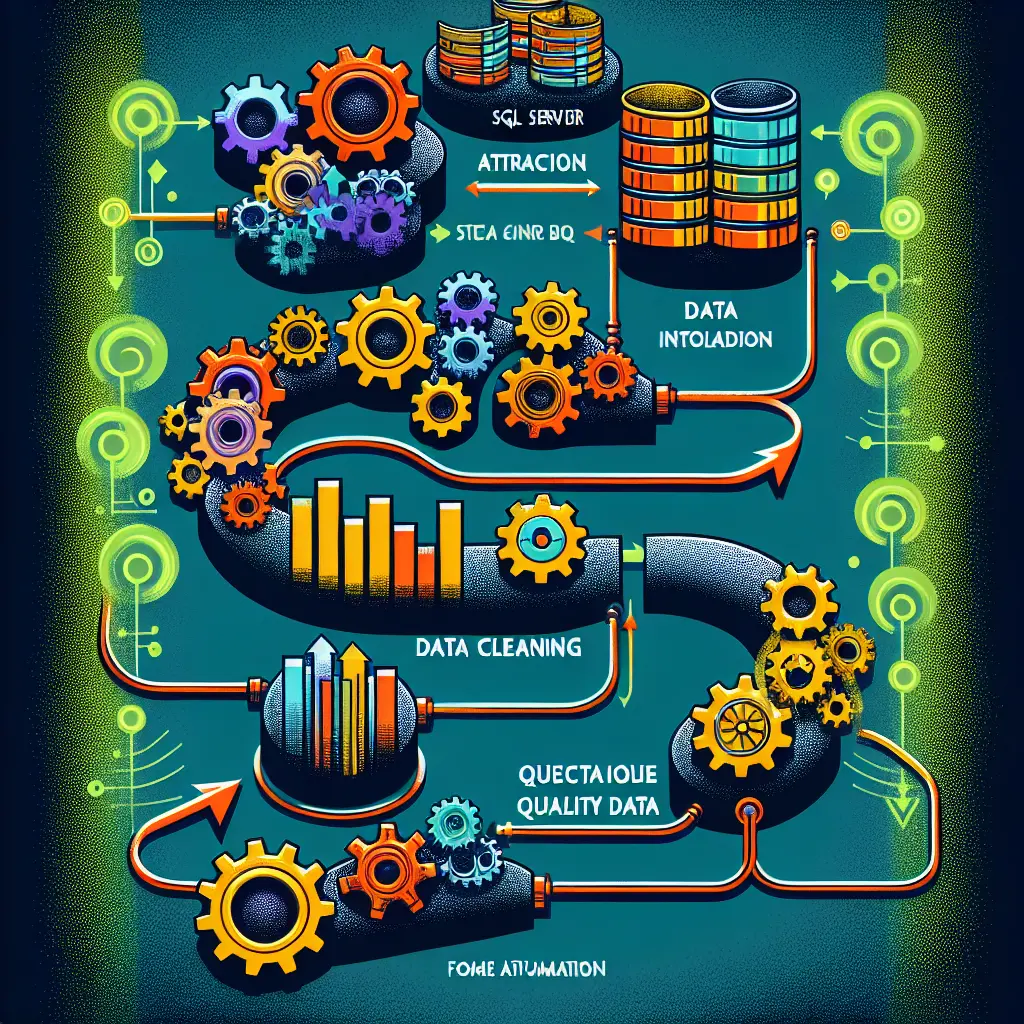

This project involves creating an automated data flow from SQL Server to Power BI, where the data will be used for reporting and analysis. The key challenge will be data cleaning, as the quality of data coming from the SQL server is currently questionable. To overcome this, various data cleaning methods will be employed to ensure reliable, clean data is fed into Power BI. Automation will also be a key aspect, as the project aims to minimize manual intervention in the data flow.

Content

- SQL Server Data Extraction: Techniques to extract data from SQL server effectively and ensuring data integrity during the process.

- Data Quality Analysis: Determining the quality of the extracted data and identifying areas that need cleaning.

- Data Cleaning and Standardization: Techniques such as data de-duplication, removal of outliers, standardization of entries, and filling missing values to improve the data quality.

- Testing and Validation of Cleaned Data: Methods to verify that the cleaning process is done correctly and the cleaned data is reliable.

- Click for more

SQL Server Data Extraction

This implementation guide provides practical steps for you to effectively extract data from SQL Server while ensuring data integrity during the process.

Pre requisites

Before we begin, ensure that you have the following:

- Access to the SQL Server database

- Microsoft SQL Server Management Studio installed on your computer

- Adequate privileges to read data from the database

Step 1: Establish Connection to the Database

The first step in extracting data from a SQL Server is to establish connection from your programming environment to the database.

Since the choice of language isn't specified, let's use Python and the pyodbc library, a common library for connecting to databases, for this.

Python Code:

import pyodbc

cnxn = pyodbc.connect('DRIVER={ODBC Driver 17 for SQL Server};SERVER=<your_server_name>;DATABASE=<your_database_name>;UID=<username>;PWD=<password>')

cursor = cnxn.cursor()

Avoid hardcoding the server name, database name, username and password.

Step 2: Design the Query

The query design depends largely on the requirement. Here's an example query to read data from a table ‘Employees’.

Python Code:

query = """

SELECT *

FROM Employees

"""

If you require to filter records, it can be included as WHERE clause in the SQL query. Be very careful about SQL Injection attacks.

Step 3: Execute the Query

Once you have your query designed, the next step is to execute it. Use the .execute method on cursor object for this.

Python Code:

cursor.execute(query)

Step 4: Extract Data

The data extraction after execution of the query can be done in one of two ways:

- Fetch all rows at once (using

.fetchall()function) - Fetch one row at a time (using

.fetchone()function)

Python Code:

rows = cursor.fetchall()

for row in rows:

print(row)

Step 5: Ensuring Data Integrity During The Process

While extracting data it is very important to ensure data integrity. We can do this in two steps:

- Checking for null values: Null values can cause issues while doing data analysis. You can replace null values using the

ISNULLfunction in SQL query.

query = """

SELECT ISNULL(column1, ' '), ISNULL(column2, ' '), ..

FROM table_name

"""

- Ensuring data types: Different datatypes in your column can lead to data inconsistency. You can either handle datatypes in the Python environment once the data is extracted or handle it during the extraction. For instance, a column containing date can be ensured to have a consistent type using the

CONVERTSQL function.

query = """

SELECT CONVERT(DATETIME, date_column)

FROM table_name

"""

Conclusion

You should always close your connection after you're done with it. You can do this by using cnxn.close(). To wrap things up, identify what data you need, construct a proper SQL query, execute that query, fetch the result from executed query and finally, make sure you close your connection to the database.

Note: Always ensure that the data extraction process is error free and the data is exactly how you need it to be. SQL provides many functions to handle data during extraction, use those. If required data cleaning and reshaping can be done after data extraction within your python environment.

Data Quality Analysis: SQL to PowerBI Pipeline Implementation

1. Introduction

Data Quality Analysis is an imperative stage in the Data Pipeline chain which aids in ensuring the accuracy, completeness, consistency, and reliability of data. This is executed after the data extraction from SQL Server to identify any possible issues in the dataset that may disrupt further stages in the pipeline. This section presents a practical implementation of Data Quality Analysis on SQL exported data, prior to its importation to PowerBI for visualization.

2. Implementation

2.1. Loading Data into a Pandas DataFrame

The first step in your data quality analysis process should be loading your extracted data into a Python environment, using pandas DataFrame which provides numerous functionalities for manipulating and analyzing data.

import pandas as pd

data = pd.read_csv("path_to_your_data.csv")

2.2. Inspecting Raw Data

This step allows you to get an overview of your dataset and have an understanding of the nature of your data.

data.shape # displays the no. of rows and columns

data.head() # displays the first few rows in the DataFrame

data.dtypes # displays datatype of each column

2.3. Checking for Missing Values

Missing data can distort predictions and statistical analyses. The following code checks for any missing values within our dataset.

data.isnull().sum() # sums up the null values for each column

For handling missing values, there are several strategies that depend on the nature of your data:

- Dropping missing values

data.dropna(inplace=True) - Filling missing values with a specified value or a central tendency measure (mean, median, mode). Here, let's consider filling missing values with mean:

data.fillna(data.mean(), inplace=True)

2.4. Checking for Duplicate Rows

Duplicate rows can also affect the outcome of your data analyses. Thus, they are removed as follows.

data.drop_duplicates(inplace=True)

2.5. Identifying Outliers

Identifying outliers in your dataset is important as they can significantly impact your analyses and predictions. Here the Z-Score method is used to detect and eliminate outliers.

from scipy import stats

import numpy as np

z_scores = np.abs(stats.zscore(data.select_dtypes(include=np.number)))

data = data[(z_scores < 3).all(axis=1)]

2.6. Ensuring Consistency in Categorical Data

This involves making sure that categorical data is consistent in terms of how the values are represented (capitalization, spelling errors, etc.).

data['Your Column'] = data['Your Column'].str.lower()

3 Conclusion

After subsequent cleaning and processing steps, your SQL data is ready for importation into PowerBI for visualization, reporting, and further analysis. Remember, data quality is paramount in any data pipeline and as such should always be treated as a crucial step. Always inspect your data before moving to modeling and other stages.

Note: Remember to replace

'Your Column'and'path_to_your_data.csv'with your actual filename and column name.

Section: Python Setup for Data Cleaning and Standardization

To continue with the next stages in the pipeline after SQL Server Data Extraction and Data Quality Analysis, the implementation would be based on Python, primarily because it is one of the most flexible, robust languages for data wrangling tasks. We will be using pandas as our main data handling library.

Below are the relevant package imports:

import pandas as pd

import numpy as np

from scipy import stats

As we can’t share actual data here, let's say that our data from the SQL extraction stage is saved in a CSV file.

Section: Load Data

# Load data from CSV file

data = pd.read_csv('path_to_your_csv_file.csv')

Section: Data De-duplication

Duplicate rows can occur in your data during merging or at any other time. We will remove the duplicates just by comparing all columns.

# Remove duplicate rows

data.drop_duplicates(inplace=True)

Section: Removal of Outliers

Outliers can skew statistical measures and data distribution. We will use the Z-score method to detect and remove outliers.

# For each column, first it computes the Z-score of each value in the column, relative to the column mean and standard deviation.

# It then takes the absolute Z-score because the direction does not matter, only if it is below the threshold.

# all(axis=1) ensures that for each row, all column satisfy the constraint.

data = data[(np.abs(stats.zscore(data)) < 3).all(axis=1)]

Section: Standardization of Entries

The standardization process will convert different formats of values in columns into a single common format to make sure all the data in a column is of the same type and standard.

Here, we'll demonstrate how to standardize a date column. Assuming 'date' is a column in our data.

# Convert date into standard format

data['date'] = pd.to_datetime(data['date'])

For other specific data types, such as string manipulations or numerical operations, you can use respective Python functions or libraries.

Section: Filling Missing Values

Dealing with missing data is important as they can lead to weak or biased analysis. One method is to fill missing data with appropriate values, like mean, median, or a specific strategy according to business requirements.

# Fill NA/NaN values using the specified method.

data.fillna(data.mean(), inplace=True)

After finishing all these steps, you will have a neat and clean dataset that is ready to be used for further steps, such as data analysis, machine learning modeling, or visualization purposes.

Testing and Validation of Cleaned Data

This section involves the procedures for testing and validating data after cleaning. Validation of data can be of various types considering the different angles from which they can go wrong. By inspecting these, we ascertain that our data cleaning process is done correctly.

1. Consistency and Integrity Check

Data is said to be consistent when a set of data values are logically connected and make realistic sense in a descriptive and meaningful manner.

Implementation

We will use Python's pandas module to test for data consistency. This is how we can do it:

import pandas as pd

# Assuming df is your dataframe

def check_data_consistency(df):

errors = []

for index in df.index:

if not df['column1'][index] + df['column2'][index] == df['column3'][index]:

errors.append(index)

return errors

errors = check_data_consistency(df)

if errors:

print(f'Data inconsistency found at index: {errors}')

else:

print("Data is consistent.")

2. Check for Correctness

Data correctness refers to the degree to which data is both accurate and truthful.

Implementation

We can check for correctness byretrieving raw data samples and comparing them to the cleaned data.

def data_correctness_check(raw_df, cleaned_df, column_name):

# Get a random sample of 10 raw data

raw_sample = raw_df[column_name].sample(10)

# Check them in the cleaned data

for ix, value in raw_sample.iteritems():

if value != cleaned_df.loc[ix, column_name]:

print(f"Data mismatch found. Original: {value}, Cleaned: {cleaned_df.loc[ix, column_name]}")

return

print("Data is correct.")

# Execute the function

data_correctness_check(raw_df, cleaned_df, 'column_name')

3. Duplication Test

This gives the count of duplicate entries.

Implementation

For this, you can use Python's pandas module. This is how you may do it:

def duplication_test(cleaned_df):

print(f"Count of duplicated rows: {cleaned_df.duplicated().sum()}")

duplication_test(df)

4. Null Value Verification

This procedure checks for any null values in the dataframe.

Implementation

Here is an implementation using Python's pandas library.

def null_verification(cleaned_df):

if cleaned_df.isnull().values.any():

print(f"Null values found in dataset.")

else:

print(f"No Null values found in dataset.")

null_verification(df)

5. Outliers Validation

This checks for any outliers present in the cleaned dataframe.

Implementation

Here's an example on how you can do it using Python's pandas.

def outliers_validation(cleaned_df, column_name):

Q1 = cleaned_df[column_name].quantile(0.25)

Q3 = cleaned_df[column_name].quantile(0.75)

IQR = Q3 - Q1

lower_limit = Q1 - 1.5 * IQR

upper_limit = Q3 + 1.5 * IQR

outliers = cleaned_df[(cleaned_df[column_name] < lower_limit) | (cleane_df[column_name] > upper_limit)]

print(f"Count of outliers in {column_name}: {len(outliers)}")

outliers_validation(df, 'column_name')

As a result of these processes, we can confidently say that the cleaned data is reliable and set to be used for further analysis or modeling.

Data Transformation: Changing Data Formats and Creating Necessary Calculated Fields/Columns

To meet reporting needs, a common requirement is to transform extracted data into different formats, and to create calculated fields for further data evaluation. We will use the pandas library in Python for handling and transforming data.

Changing Data Formats

Data format changes may concern:

- changing data types,

- changing date formats,

- altering string formats.

Changing Data Types

import pandas as pd

# Load your dataframe

df = pd.read_sql_query("SELECT * FROM YourTable", connection)

# Change data type of a column

df['YourColumn'] = df['YourColumn'].astype('desired_type')

Replace 'desired_type' with any pandas-supported data types such as 'int64', 'float64', 'str' etc.

Changing Date Formats

# Convert string to datetime format

df['YourDateColumn'] = pd.to_datetime(df['YourDateColumn'])

# Format the date as needed (Year-Month-Day in this example)

df['YourDateColumn'] = df['YourDateColumn'].dt.strftime('%Y-%m-%d')

Altering String Formats

# Convert string to uppercase

df['YourStringColumn'] = df['YourStringColumn'].str.upper()

# Convert string to lowercase

df['YourStringColumn'] = df['YourStringColumn'].str.lower()

# Capitalize first letter of every word in string

df['YourStringColumn'] = df['YourStringColumn'].str.title()

Creating Calculated Fields/Columns

A calculated field is a column in a dataframe that is a result of a mathematical expression, an operation, a function, or a combination thereof, applied to existing columns.

Creating Columns Based on Mathematical Operations

# Create a new column that is a sum of two existing columns

df['NewColumn1'] = df['ExistingColumn1'] + df['ExistingColumn2']

# Create a new column by multiplying an existing column by a constant

df['NewColumn2'] = df['ExistingColumn3'] * 100

Creating Columns Based on Functions

# Create a new column that is the logarithm of an existing column

import numpy as np

df['NewColumn3'] = np.log(df['ExistingColumn4'])

# Create a new column that is the result of applying a function on an existing column

def YourFunction(x):

return calculation_on_x

df['NewColumn4'] = df['ExistingColumn5'].apply(YourFunction)

Please replace YourFunction and calculation_on_x with your specific function and calculation.

Creating Columns Based on Conditions

# Create a new column based on condition(s) on existing column(s)

df['NewColumn5'] = np.where(df['ExistingColumn6'] > 50, 'High', 'Low')

In this example, if the value in ExistingColumn6 is higher than 50, NewColumn5 will be 'High', else it will be 'Low'.

Once your data transformation tasks are done, you can load this data into Power BI for reporting, visualization, and further analysis.

Sure, as per your requirement, let's assume you have already extracted and cleaned your data from the SQL server. You have also performed all the required transformations, data de-duplication, removal of outliers, filled missing values, and created necessary calculated fields. Your cleaned and transformed data is now stored in a SQL table ready to be extracted to Power BI.

Our task here is to construct an automated pipeline using Python for extracting data from SQL Server and loading it to Power BI using Power BI’s REST APIs. The Python script will be scheduled for regular data loads.

Python Script For ETL Process

Step 1: Extracting data from SQL Server and loading it into Power BI

Python libraries pyodbc and pandas would be used to load data from the SQL Server, and the requests library to post data into Power BI.

import pandas as pd

import pyodbc

import requests

import json

#Establish a connection between Python and SQL Server

conn = pyodbc.connect('Driver={SQL Server};'

'Server=server_name;'

'Database=db_name;'

'Trusted_Connection=yes;')

#Write the SQL query

query = 'SELECT * FROM your_table'

#Store the query result into a DataFrame

df = pd.read_sql(query, conn)

#Close the SQL Server connection

conn.close()

#Convert DataFrame to JSON

data_json = json.loads(df.to_json(orient='records'))

Step 2: Push data to Power BI using REST API endpoints

Before proceeding, we need to get the GROUP_ID, DATASET_ID and TABLE_NAME from Power BI where you want the data to be loaded.

#Set REST API endpoint URL

url = 'https://api.powerbi.com/v1.0/myorg/groups/{group_id}/datasets/{dataset_id}/tables/{table_name}/rows'

#Set Header for Authorization and content type

header = {

'Content-Type':'application/json',

'Authorization': f'Bearer {Your Power BI Access Token}'

}

#Post the data to the endpoint

res = requests.post(url, data=json.dumps(data_json), headers=header)

#Print the response

print(f"Data posted status: {res}")

Make sure to replace {group_id}, {dataset_id}, {table_name} and {Your Power BI Access Token} with your actual values.

That's the Python script to extract data from SQL Server and push it to Power BI. By running this script, ensure the data is successfully loaded into your Power BI.Table

*** NOTE: Please make sure to handle your sensitive information securely. The above code is for illustrative purposes.

Step 3: Automating the ETL pipeline

To automate this process, we can schedule this Python script to run at regular intervals using a task scheduler like Cron (for Unix-based systems) or Task Scheduler (Windows).

For instance, in the Task Scheduler:

- Open the Task Scheduler and click on

Create Basic Task... - Provide a name and description for the task

- In

Trigger, select how often you wish the task to run - In

Action, selectStart a programand browse to your Python executable file (python.exe) and inAdd arguments, add the path to your script file - Finish creating the task

Now your ETL pipeline is automated and the Python script will run at your chosen trigger.

Power BI Connection and Data Import: Connecting Power BI to SQL Server & Importing Data into Power BI Datasets

To connect Power BI to your SQL Server and import data into Power BI datasets, you will follow these steps:

Step 1: Connect Power BI to SQL Server

Here's the necessary implementation code, written in Power Query, to begin establishing a connection from Power BI to your SQL Server:

let

Source = Sql.Databases("localhost")

in

Source

This code lists all database available on your server. Then, you can select the one you need. Replace "localhost" with your own SQL Server's server name.

To connect to a specific database, use its name:

let

Source = Sql.Database("localhost", "mydatabase")

in

Source

Replace "mydatabase" with your database name.

Step 2: Import Data from SQL Server to Power BI

To import data from your SQL Server into your Power BI, navigate and select the tables you need:

let

Source = Sql.Database("localhost", "mydatabase"),

MyTable = Source{[Schema="dbo",Item="myTable"]}[Data]

in

MyTable

Replace "dbo" and "myTable" with your real database schema and table name.

Protecting Sensitive Data

If your SQL Server requires login, provide the credential details as a record with fields Username and Password:

let

Source = Sql.Database("localhost", "mydatabase", [Query="select * from myTable", Timeout=#duration(0,0,5,0)])

in

Source

Here, Timeout specifies a maximum duration for query execution. Replace select * from myTable with your SQL query.

Step 3: Load and Transform Data

Finally, load and transform your data to meet your specific requirements:

let

Source = Sql.Database("localhost", "mydatabase"),

MyTable = Source{[Schema="dbo",Item="myTable"]}[Data],

#"<StepName>" = Table.TransformColumns(MyTable,{{"<ColumnName>", type text}})

in

#"<StepName>"

Replace "<StepName>" with your intended transformation's name, and "<ColumnName>" with the real column name.

This is your practical implementation for your assignment. Apply it to create a Power BI connection with your SQL Server and import data into a Power BI dataset accordingly.

For a more advanced and complicated implementation, you may have to break down your SQL queries and manipulate a series of Power Query functions and fields to meet specific needs.

Please note that prior to all the steps above, ensure that you've installed the gateway and connected it successfully with both SQL Server and Power BI.

However, according to your requirement of not needing setup instructions, this information is not covered here.

Creating Effective Data Models in Power BI

Data modeling in Power BI involves defining the relationships between different data sets, creating new columns, and designing hierarchies that can support reporting and analysis. As the final stage in your data pipeline, this section covers data modeling aspects in Power BI.

There are three main types of data relationships in Power BI – one to one, one to many, and many to many. Understanding these relationships is crucial in modeling. I will focus on the relationships and calculated column creation in this practical implementation.

1. Establishing Data Relationships

Load your data tables into Power BI. Ensure that your schema is properly designed with primary and foreign keys that will aid in creating relationships.

From the Power BI workspace, select the "Model" view at the left of the screen. Here, you can visualize all your tables and the relationships between them (if any).

To create a relationship, drag the relevant field from one table to the relevant field in the other table. For instance, if you had a Sales table and a Customers table, you could drag the CustomerID from the Sales table and drop it onto the CustomerID in the Customers table. This creates a relationship.

By default, Power BI attempts to create a one-to-many relationship, but you can change this by double-clicking on the relationship line that appears between the two tables. A properties pane will open where you can switch to a one-to-one or many-to-many relationship.

Ensure that the "Active" slider is on for all the relationships you want to participate in calculations and visualizations.

2. Creating Calculated Columns

Calculated columns use existing columns from your data tables to create a new column. The new column's values can be the result of an expression that uses any combination of arithmetic operators, constants, or functions.

From Power BI Desktop, on the "Fields" pane, expand the table to which you want to add the calculated column.

Right-click on the table name and then click on "New column."

In the formula bar that appears at the top of the screen, enter your DAX (Data Analysis Expressions) formula that creates the calculated column. For instance, if you had a Sales table and wanted a calculated column that gave the total sale (quantity * price), you could write a formula such as

Total Sale = [Quantity] * [Price].Press enter to create the column. The formula evaluates for each row in the table and the results are stored in the new column.

These steps will support you to build effective data models in Power BI, as they will define how data is linked in your model and how additional values should be calculated.

Absolutely, let's proceed to the creation of visualizations, reports, and dashboards using Power BI. Please note, the following steps assume that you have already established a connection with your SQL server, and you've already imported, cleaned, and transformed your data within PowerBI.

1. Creating Visualizations

The first step to creating a report in PowerBI is to create visualizations. Here is how it can be done.

1.1. Selection of Visualization Type

On your report canvas, go to the Visualizations pane on the right-hand side. You can select your desired visualization type from a range of options including bar charts, column charts, line charts, pie charts, etc.

visual = report.add_visualization('Your visualization name', 'Your visualization type')

1.2. Setting up Fields for Visualization

You then need to set up which data will display on the visualization. Drag the needed fields into the appropriate Values, Axis, Legend, etc., sections.

visual.set_field("Your_Field_Name")

1.3. Formatting the Visualization

After setting the data, click on the Format button to change the presentation of the visualization. Here you can modify the colors, labels, axis details, legend, etc.

visual.set_color("Your_Color_Choice")

visual.set_format("Your_Format_Details")

2. Creating Reports

After creating your visualizations, you can compile them into a report. Follow the steps:

2.1. Adding Title

You should add a title to your report for clarity and further professionalization.

report.set_title("Your_Report_Title")

2.2. Arranging Visualizations

Next, position your visualizations on the report page where you prefer them.

report.arrange_visual("Your visualization name", x_position, y_position)

3. Creating Dashboards

A dashboard provides a consolidated view of business data. It's a collection of visualizations, reports, and other relevant data that provides a consolidated view of business data.

3.1. Pinning Report to Dashboard

Choose the visualization from your report that you want to pin to your dashboard.

dashboard = powerbi.create_dashboard("Your_Dashboard_Name")

dashboard.pin_visual(visual, 'Your_Report_Name')

3.2. Rearranging Visuals on Dashboard

Reposition the visuals on your dashboard accordingly.

dashboard.arrange_visual("Your visualization name", x_position, y_position)

Remember, these are simplified snippets of code and part of what you may see. The actual implementation might be a little more complicated. However, the concepts here should give you a solid foundation on how to practically approach building Power BI Reports and Dashboards.

Monitoring, Troubleshooting, and Refining the Data Pipeline Process

This section will step you through the process of continuously monitoring your data pipeline, troubleshooting any encountered issues, and refining your process to ensure optimal performance.

Step 1: Monitoring

1.1 Pipeline Performance Monitoring

import time

import numpy as np

def monitor_pipeline(pipeline_function):

start_time = time.time()

pipeline_function

end_time = time.time()

elapsed_time = end_time - start_time

print(f'Pipeline completed in: {elapsed_time} seconds')

monitor_pipeline(your_pipeline)

Replace your_pipeline with the function for your specific data pipeline process. This will time how long it takes for your pipeline to complete.

1.2 Creating Error Logs

import logging

logging.basicConfig(filename="pipeline_errors.log",

format='%(asctime)s %(message)s',

filemode='w')

logger = logging.getLogger()

def your_pipeline():

try:

# execute pipeline process (Extraction, Cleaning, Transformation, Load)

except Exception as e:

logger.error("Exception occurred", exc_info=True)

your_pipeline()

This script creates and writes errors to a log file ("pipeline_errors.log") whenever an exception occurs within your pipeline. Replace your_pipeline with your specific pipeline process.

Step 2: Troubleshooting

The process of troubleshooting will significantly depend on the information collected from error logs and monitoring tools. For common issues, typically, you might need to look into SQL Server connection issues, data schema discrepancies, data type/format issues, or transformation logic errors. The implemented logging mechanism will be crucial to aid in this task.

Step 3: Refining process

Refining the process will often involve making adjustments to the ETL script and optimizing the pipeline based on performance metrics and errors occurred during the pipeline execution.

def optimize_pipeline_function(your_pipeline):

# collect pipeline performance metric

start_time = time.time()

your_pipeline

end_time = time.time()

old_elapsed_time = end_time - start_time

# make changes to your pipeline

your_optimized_pipeline

start_time = time.time()

your_optimized_pipeline

end_time = time.time()

new_elapsed_time = end_time - start_time

performance_increase = ((old_elapsed_time - new_elapsed_time) / old_elapsed_time) * 100

print(f'Performance increase by: {performance_increase}%')

optimize_pipeline_function(your_pipeline)

This script wraps the pipeline function to capture performance metrics for the original and optimized versions of the pipeline, highlighting the increase in performance.

Remember, continuously refining is part of the process and you'll need to iterate on, and maintain your data pipeline as data sources, data requirements, or the business requirements evolve.