Thread

SQL Weekly Sales Summary; OVERVIEW: This SQL code extract demonstrate...

This SQL code extract demonstrates how to generate a weekly sales summary report using data from the `salesorderheader` and `salesorderdetail` tables. The code calculates the total sales for each week by multiplying the `orderqty` and `unitprice` col...

SQL Weekly Sales Summary; OVERVIEW: This SQL code extract demonstrate...

Description

This SQL script calculates the total sales for each week by joining the salesorderheader and salesorderdetail tables and grouping the results by the start date of the week. It uses the DATE_TRUNC function to truncate the order date to the beginning of the week and performs the SUM operation to calculate the total sales. The script is structured with clear explanations and can be used as a reference for creating similar queries. For further learning and optimization of SQL query creation, you can explore the SQL courses available on the Enterprise DNA Platform.

NAME: SQL Code Visualization with DOT Language; OVERVIEW: The input presents a SQL code snippet that can be visualized using the DOT language. It uses two nodes to represent tables ("salesorderheader" and "salesorderdetail") and shows their attributes within the nodes. The 'Join on' operation is represented by a bi-directional arrow between corresponding attributes of both tables. The input also provides an explanation of the SQL code, highlighting the use of functions, join operations, and group/order by operations. It further recommends courses for expanding one's knowledge of SQL code and improving visualization skills using the DOT language.; DESCRIPTION: The input provides a detailed explanation of a SQL code snippet and suggests visualizing it using the DOT language. It showcases the use of graphical representation to illustrate the tables, their attributes, and the join operation. The explanation emphasizes the importance of understanding SQL code and highlights resources available for learning SQL and DOT visualization techniques. This input serves as a helpful guide for individuals looking to enhance their SQL skills and improve their ability to visually represent SQL code.

NAME: Analysis of SQL Query Complexity and Optimization; OVERVIEW: The analysis begins by noting that accurately evaluating the time complexity of the SQL query using Big-O notation is not possible due to its dependency on the SQL server's parsing and execution. However, estimations can be made based on fundamental operations. The main operations identified are Joining, Aggregation functions (Sum, Group By, Order By), and Date truncating. The worst-case time complexity of the Join operation is O(n*m), where n is the number of rows in the first table and m is the number of rows in the second table. The 'Group By' and 'Order By' operations have a best-case time complexity of O(n log n) and a worst-case of O(n^2), while the Date truncating operation takes linear time of O(n). The worst-case time complexity is estimated to be O(n^2 * m^2). Regarding space complexity, it is primarily determined by the memory needed to store intermediate results of operations. Assuming n as the total number of records after the join operation and m as the total number of groupings created by 'Group By', the space complexity is estimated as O(n * m). The analysis also suggests several optimizations, including creating an index on the relevant column, storing truncation results temporarily, and reducing rows through where conditions. It emphasizes the importance of examining the execution plan and offers resources for further learning.; DESCRIPTION: The input provides a detailed analysis of the time and space complexity of a SQL query. It estimates the worst-case time complexity of the query based on the main operations involved and provides recommendations for optimizing the query's performance. The analysis suggests various strategies, such as creating indexes, storing temporary results, and reducing rows, to improve efficiency. It also highlights the significance of understanding the execution plan and offers additional learning resources for further exploration. This input serves as a valuable resource for individuals seeking to optimize SQL queries and enhance query performance.

NAME: Data Modeling and Semantic Layer Guide; OVERVIEW: This guide explains the concepts of data modeling and the semantic layer, highlighting their differences and the roles they play in data management. It covers the process of data modeling and the benefits of the semantic layer in providing a simplified and user-friendly interface for accessing and analyzing data. It also discusses the tools commonly used for data modeling and creating the semantic layer. Whether you are a data professional or a business user, this guide will help you understand how these concepts contribute to effective data management and analysis.; DESCRIPTION: The input offers a comprehensive guide that explains data modeling and the semantic layer in data management. It describes the process of data modeling and emphasizes the benefits of the semantic layer in providing a user-friendly interface for data access and analysis. The guide also mentions the tools commonly used for data modeling and creating the semantic layer. It highlights the relevance of these concepts for both data professionals and business users and underscores their importance in effective data management and analysis. This input serves as a valuable resource for individuals looking to gain a deeper understanding of data modeling and the semantic layer.

NAME: Importance of Semantic Layer and Data Modeling in AI and ML; OVERVIEW: The input emphasizes the continued importance of the semantic layer and data modeling in the realm of AI and ML. The semantic layer acts as a bridge between the technical database and end-users, providing a user-friendly interface for data access and analysis. Data modeling, on the other hand, involves organizing and structuring data to facilitate efficient analysis and decision-making. These concepts simplify data access for non-technical users, optimize query performance, ensure data integrity, and maintain consistency. Additionally, the integration of the semantic layer and data modeling with AI and ML can enhance these initiatives by providing a well-structured and organized data environment. Overall, this input emphasizes the ongoing relevance of these concepts in the data analysis landscape and encourages individuals to acquire these skills for effective data utilization. DESCRIPTION: The input highlights the importance of the semantic layer and data modeling in the context of AI and ML. It explains how the semantic layer bridges the gap between technical databases and end-users, providing a user-friendly interface for data access and analysis. Data modeling, on the other hand, involves organizing and structuring data to enable efficient analysis and decision-making processes. The input emphasizes that these concepts are essential for simplifying data access, optimizing query performance, ensuring data integrity, and maintaining consistency. Additionally, it highlights the role of the semantic layer and data modeling in enhancing AI and ML initiatives by offering a well-structured and organized data environment. This input serves as a reminder of the ongoing significance of these concepts in the data analysis field and encourages individuals to develop their skills in these areas.

SQL code for Weekly Sales Summary Report

Tool: Code Explainer

Created: 01/01/2024

Prompt

SELECT

DATE_TRUNC('week', soh.orderdate) AS week_start_date,

SUM(sod.orderqty * sod.unitprice) AS total_sales

FROM

{{ Table("salesorderheader") }} soh

JOIN

{{ Table("salesorderdetail") }} sod ON soh.salesorderid = sod.salesorderid

GROUP BY

week_start_date

ORDER BY

week_start_date;

This SQL code extract is designed to provide a summarized report of total sales per week. The code uses PostgreSQL's DATE_TRUNC function, JOIN operation, and aggregate functions like SUM.

Let's break this code down:

'SELECT' Clause

SELECT

DATE_TRUNC('week', soh.orderdate) AS week_start_date,

SUM(sod.orderqty * sod.unitprice) AS total_sales

This part of the code is responsible for defining which information will be extracted from the database.

DATE_TRUNC('week', soh.orderdate) AS week_start_date: Here, the DATE_TRUNC function is used to get the first day of the week (by default Monday) from theorderdate. The 'week' parameter instructs the function to truncate theorderdatedown to the week, effectively standardizing the time to the start of the week.SUM(sod.orderqty * sod.unitprice) AS total_sales: This calculates the total sales by multiplyingorderqtyandunitpriceand then summarizing it with theSUMfunction.

'FROM' and 'JOIN' Clauses

FROM

{{ Table("salesorderheader") }} soh

JOIN

{{ Table("salesorderdetail") }} sod ON soh.salesorderid = sod.salesorderid

This part of the code indicates the source of the data needed for the retrieval operation.

FROM {{ Table("salesorderheader") }} soh: This specifies that the data is being retrieved from thesalesorderheadertable.sohis an alias used for brevity.JOIN {{ Table("salesorderdetail") }} sod ON soh.salesorderid = sod.salesorderid: This statement joins thesalesorderheadertable with thesalesorderdetailtable based on the conditionsoh.salesorderid = sod.salesorderid.

'GROUP BY' and 'ORDER BY' Clauses

GROUP BY

week_start_date

ORDER BY

week_start_date;

GROUP BY week_start_date: This statement organizes the selected data into groups by each week's start date. This is necessary for aggregate functions such asSUMto operate correctly.ORDER BY week_start_date;: Finally, theORDER BYclause organizes the output rows by the week start date in ascending order.

Please note that {{ Table("") }} is a placeholder and it should be replaced with the actual table name, if you want to run the query in any SQL-based database management system.

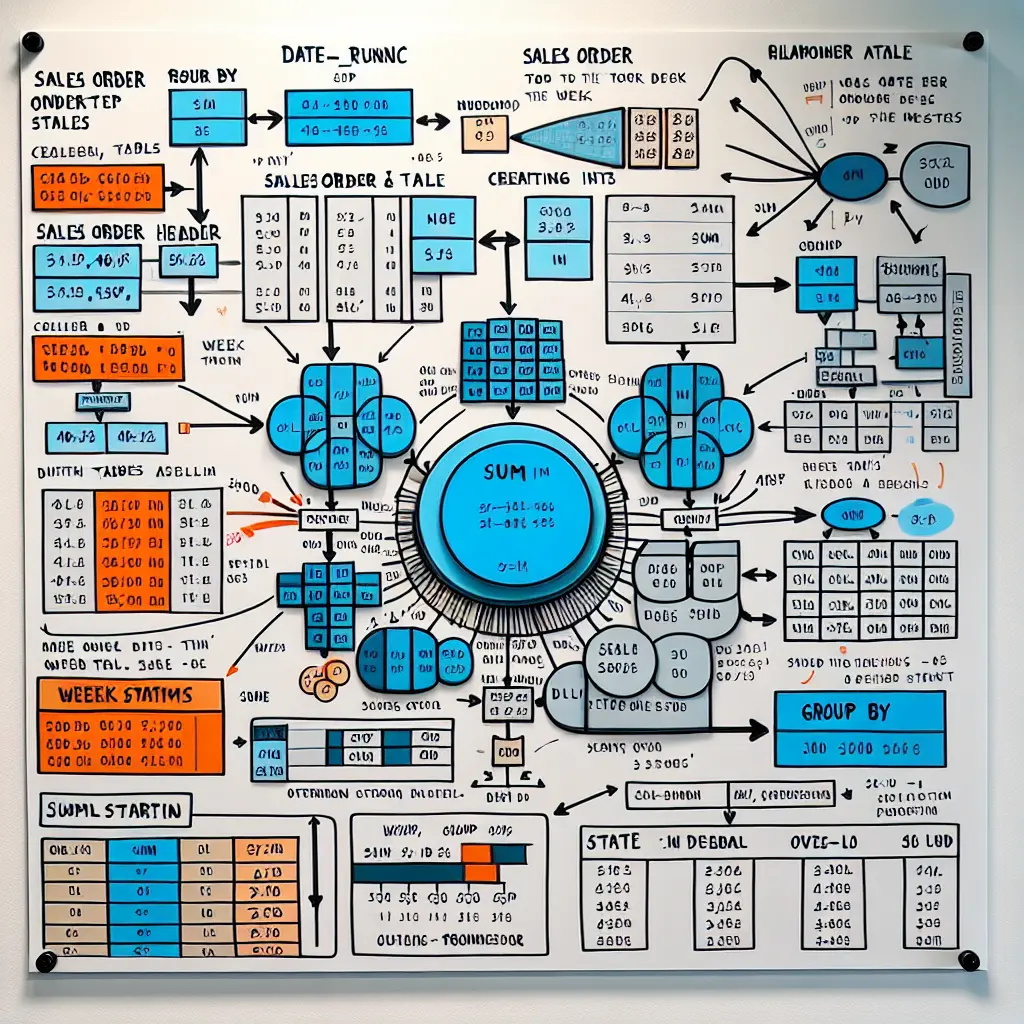

Visual Representation of SQL Code

Tool: Code Visualizer

Created: 01/01/2024

Prompt

SELECT

DATE_TRUNC('week', soh.orderdate) AS week_start_date,

SUM(sod.orderqty * sod.unitprice) AS total_sales

FROM

{{ Table("salesorderheader") }} soh

JOIN

{{ Table("salesorderdetail") }} sod ON soh.salesorderid = sod.salesorderid

GROUP BY

week_start_date

ORDER BY

week_start_date;

Visual Representation of SQL Code

The given SQL code snippet can be visualized using the DOT language, which is perfect for describing graphs.

The code forms relations between two tables: "salesorderheader" and "salesorderdetail".

Here is a basic representation using DOT language:

digraph G {

node [shape=record];

salesorderheader [label="salesorderheader|<f0> salesorderid|<f1> orderdate"];

salesorderdetail [label="salesorderdetail|<f0> salesorderid|<f1> orderqty|<f2> unitprice"];

salesorderheader:f0 -> salesorderdetail:f0 [label="JOIN ON", dir=both];

}

Here, we have used two nodes, each representing a table in the SQL code. The attributes of the tables are represented within the nodes.

salesorderheadernode corresponds to the "salesorderheader" table with attributes assalesorderidandorderdate.salesorderdetailnode corresponds to the "salesorderdetail" table with attributes assalesorderid,orderqty, andunitprice.

The 'Join on' operation is represented by a bi-directional arrow between salesorderid of both tables.

Understanding SQL Code

DATE_TRUNC('week', soh.orderdate) AS week_start_date: This is truncating the 'orderdate' to the nearest week and is saved as 'week_start_date'.SUM(sod.orderqty * sod.unitprice) AS total_sales: This is calculating total sales by multiplying quantity with unit price for each line item and then summing them up.JOINoperation is done on the 'salesorderid' of both tables 'salesorderheader' and 'salesorderdetail'.- The

GROUP BYandORDER BYoperations are performed on 'week_start_date' to get the result in an orderly manner.

Recommended Courses

For a deeper understanding of SQL code and to further improve your visualization skills using DOT language, I recommend you to refer to some of the best courses provided on the Enterprise DNA Platform.

SQL Script for Weekly Sales Total

Tool: Documentation Generator

Created: 01/01/2024

Prompt

SELECT

DATE_TRUNC('week', soh.orderdate) AS week_start_date,

SUM(sod.orderqty * sod.unitprice) AS total_sales

FROM

{{ Table("salesorderheader") }} soh

JOIN

{{ Table("salesorderdetail") }} sod ON soh.salesorderid = sod.salesorderid

GROUP BY

week_start_date

ORDER BY

week_start_date;

Documentation

This SQL script is used to fetch the total sales for each week based on sales order information.

Query Explanation

SELECT

DATE_TRUNC('week', soh.orderdate) AS week_start_date,

SUM(sod.orderqty * sod.unitprice) AS total_sales

FROM

{{ Table("salesorderheader") }} soh -- Table Alias

JOIN

{{ Table("salesorderdetail") }} sod ON soh.salesorderid = sod.salesorderid -- Joining on salesorderid

GROUP BY

week_start_date --Grouping results by week_start_date

ORDER BY

week_start_date; --Ordering results by week_start_date

- The query is selecting data and performing computations to aggregate the sales information

- The

DATE_TRUNC('week', soh.orderdate)function is used to truncate the order date to the beginning of the week. This essentially gives us the week start date for each record. - The

SUM(sod.orderqty * sod.unitprice)operation is calculating the total sales for each week. It does this by multiplying the order quantity (orderqty) by the unit price (unitprice) for each record, and then summing these results. - The

FROM {{ Table("salesorderheader") }} sohstatement is selecting from the ECMAScript (no SQL standard for table defining as functions known)salesorderheadertable with an aliassohfor further reference in the query. - The

JOIN {{ Table("salesorderdetail") }} sod ON soh.salesorderid = sod.salesorderidstatement is joining thesalesorderdetailtable with aliassodwith thesalesorderheadertable based on thesalesorderid. This links together related records from the two tables. - The

GROUP BY week_start_dateclause is grouping the result set by the start date of the week. - Finally, the

ORDER BY week_start_dateclause is ordering the result set in ascending order by the start date of the week.

For deeper knowledge of SQL query creation and optimization, you might find relevant the SQL courses on the Enterprise DNA Platform.

Time and Space Complexity Analysis of SQL Query

Tool: Complexity Estimator

Created: 01/01/2024

Prompt

SELECT

DATE_TRUNC('week', soh.orderdate) AS week_start_date,

SUM(sod.orderqty * sod.unitprice) AS total_sales

FROM

{{ Table("salesorderheader") }} soh

JOIN

{{ Table("salesorderdetail") }} sod ON soh.salesorderid = sod.salesorderid

GROUP BY

week_start_date

ORDER BY

week_start_date;

Time complexity Analysis:

This problem's time complexity cannot be evaluated accurately using Big-O notation, as it's entirely dependent on how your SQL server parses and executes the query. However, we could make estimations based on fundamental operations involved in the query.

The main operations in this SQL script are:

- Joining

- Aggregation functions (Sum, Group By, Order By)

- Date truncating

The Join operation, depending upon the type, typically has a worst-case time complexity of O(n*m) [n: number of rows in the first table, m: number of rows in the second table].

Both 'Group By' and 'Order By' operations involve sorting and have a best-case scenario time complexity of O(n log n) and worst-case scenario of O(n^2) [n: total rows after the join operation].

Conversion functions like DATE_TRUNC would typically take a linear time complexity of O(n), the number of records being passed through the function.

So, in the worst-case scenario, the time complexity could be considered as O(n^2 * m^2).

Space complexity Analysis:

The space complexity is primarily defined by the memory needed to store intermediate results of operations (especially Join, Group By, and Order By operations). The more records or columns these operations return or use, the more memory will be used.

Assuming 'n' as the total number of records after the join operation and 'm' as the total number of groupings created by 'Group By', the space complexity would be O(n * m).

Optimizations:

- An index on the 'salesorderid' column in both tables, to expedite Join operations.

- Consider storing the truncation results temporarily to improve the speed of computations instead of truncating it each time.

- Reducing the number of rows through where conditions before executing join or group by operations would also help in enhancing performance.

However, one should examine the actual execution plan in the context of the SQL server they are using to identify bottlenecks and apply optimizations. Courses on SQL optimisation using execution plans at Enterprise DNA Platform can provide deep insights into this.

Potential Performance Issues Analysis

Tool: Performance Predictor

Created: 01/01/2024

Prompt

SELECT

DATE_TRUNC('week', soh.orderdate) AS week_start_date,

SUM(sod.orderqty * sod.unitprice) AS total_sales

FROM

{{ Table("salesorderheader") }} soh

JOIN

{{ Table("salesorderdetail") }} sod ON soh.salesorderid = sod.salesorderid

GROUP BY

week_start_date

ORDER BY

week_start_date;

Potential Performance Issues:

- Join operation: The join operation is done on the basis of the sales order id between the

"salesorderheader"and"salesorderdetail"tables. If these tables are large, this could potentially become a performance bottleneck. - Group By and Order By on a date function: The 'DATE_TRUNC' function is used here directly in a Group By and Order By clause. If your dataset is big, this can significantly bog down query performance.

- Calculations within the query: Calculations done within the query, such as

SUM(sod.orderqty * sod.unitprice)may also have an impact on performance, especially if the number of rows is large.

Recommendations for Improvement:

- Choosing appropriate indexing: Creating appropriate indexes on

"salesorderid"in both tables can substantially improve the join operation and overall performance. - Using materialized views: Consider creating a materialized view of the

DATE_TRUNC('week', soh.orderdate). This can make the execution of the function a lot quicker as you don't have to perform the function on every row each time the query is run. - Perform calculations beforehand: If possible, consider performing the calculations beforehand (sod.orderqty * sod.unitprice) and storing them in a column in the devoted database. This will make queries faster down the line.

Further Considerations:

- Optimizing database queries is a wide area with many considerations including database design, query structure and many more. Other techniques may include using a partitioning strategy to manage large datasets or using hardware solutions such as faster disk storage or more memory.

- Enterprise DNA Platform offers numerous resources and courses that can guide the user on best practices for writing and optimizing SQL queries. Be sure to check out these resources for comprehensive learning materials on the topic.

Data Modeling and the Semantic Layer

Tool: Skills Advisor

Created: 01/01/2024

Prompt

Is data modelling part of the symantic layer?

Data Modeling and the Semantic Layer

Data modeling is not a part of the semantic layer. The semantic layer is a logical layer that sits between the physical data source and the end-user. It is a translation layer that simplifies the complexity of the underlying data sources and provides a unified view of the data.

Main Points:

- Data modeling is the process of designing the structure and organization of data to support specific business requirements.

- It involves identifying entities, attributes, relationships, and constraints to create a conceptual, logical, and physical representation of the data.

- The semantic layer, on the other hand, is a business abstraction that sits on top of the physical data layer and provides a simplified and user-friendly interface for accessing and analyzing data.

- The semantic layer abstracts the underlying data complexities, allowing users to work with a common business language and making it easier to explore and understand the data.

- It provides a consistent set of business rules and calculations that can be reused across different reports and dashboards.

- Data modeling is typically performed at the logical and physical levels, whereas the semantic layer focuses on the logical layer.

- The semantic layer can be created using tools like Power BI, Tableau, or QlikView, which provide a visual interface for defining the data model, relationships, calculations, and other business rules.

- Data modeling, on the other hand, can be done using tools like ERWin, Visio, or SQL Server Management Studio, which offer more advanced capabilities for designing and managing the data structures.

In summary, data modeling and the semantic layer are related but distinct concepts. Data modeling is the process of designing the data structures, whereas the semantic layer is a logical layer that simplifies and abstracts the underlying data for business users.

The Importance of Semantic Layer and Data Modeling

Tool: Skills Advisor

Created: 01/01/2024

Prompt

I’m struggling to understand why you need the symantic layer and data modelling now we have LLms and AI

The semantic layer and data modeling are still important despite the advancements in AI and machine learning (ML). Here are some key points to consider:

Definition: The semantic layer provides an abstraction between the technical database and end users, allowing them to access and analyze data in a more intuitive way. Data modeling involves structuring and organizing the data in a logical manner to support efficient analysis and decision-making.

Simplifies data access: The semantic layer provides a user-friendly interface for accessing data, making it easier for non-technical users to understand and query the data. It hides the complexity of the underlying data structures and allows users to focus on their analysis.

Performance optimization: Data modeling helps optimize query performance by creating efficient data structures like star schemas or snowflake schemas. This reduces the time required to retrieve and process data, resulting in faster and more reliable analysis.

Data consistency and integrity: By defining relationships, constraints, and business rules in data models, the semantic layer ensures data consistency and integrity. This helps in maintaining accurate and reliable data for analysis and decision-making.

AI and ML integration: The semantic layer and data modeling can enhance AI and ML initiatives by providing a well-structured and organized data environment. Data models can serve as a foundation for data ingestion, integration, and preparation, which are critical steps in AI and ML projects.

In conclusion, the semantic layer and data modeling remain relevant even with the advancements in AI and ML. They simplify data access, optimize performance, ensure data consistency, and provide a solid foundation for AI and ML initiatives. Learning these skills can greatly enhance your ability to effectively analyze and utilize data.